Announcing CVMFS CSI v2

We are glad to announce the release of cvmfs-csi v2.0.0, which brings several cool features and makes access to CVMFS repositories inside Kubernetes a lot easier. This is a large overhaul of the first version of the driver; see below for some history and details of how things have improved.

What is CVMFS

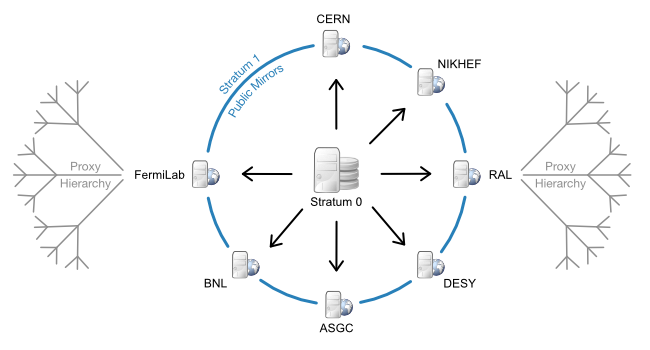

CVMFS, the CernVM File System, is a scalable, reliable and low-maintenance software distribution service, developed to help high energy physics experiments deploy software on the worldwide distributed computing grid infrastructure.

It exposes a read-only POSIX file system in user space through a FUSE module, under a universal namespace (/cvmfs), with a backend built on a hierarchy of standard web servers.

Exposing CVMFS repositories to containerized deployments, first with Docker and then with Kubernetes, has been one of our earliest requirements.

First integration: Docker and FlexVolume

In the early container days at CERN, the first integration was a Docker volume plugin, followed shortly by a Kubernetes integration through a FlexVolume driver. A Kubernetes manifest looked similar to any other volume type:

volumes:
- name: atlas
  flexVolume:
    driver: "cern/cvmfs"
    options:
      repository: "atlas.cern.ch"

After defining a flexVolume volume in a Pod spec, users could access the CVMFS repository from within their application. This worked well, and with Kubernetes 1.6 it was the best way to expose storage systems that lacked direct, in-tree support in Kubernetes. However, the design of the FlexVolume plugin API itself had many limitations. For example:

- The API only offered attach and mount operations

- Plugins had to be installed on every node, where the kubelet executed them directly

- All mount dependencies the plugins relied on (mount and filesystem tools) were assumed to be present on the host

For these and other reasons, FlexVolume plugins were later deprecated, so the CVMFS FlexVolume plugin was no longer a viable option.

Second Round: Arrival of CSI

Things changed with the arrival of CSI (the Container Storage Interface) in 2017, a standard for exposing arbitrary block and file storage systems to containerized workloads on systems such as Kubernetes.

Once the interface had become a bit more stable, we created a CSI driver for CVMFS. Its first release, in 2018, could mount a single CVMFS repository per volume, which meant defining both a StorageClass and a PersistentVolumeClaim for each repository, as in this example:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-cvmfs-cms
provisioner: cvmfs.csi.cern.ch
parameters:
  # Repository address.
  repository: cms.cern.ch
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: csi-cvmfs-cms-pvc
spec:
  accessModes:
  - ReadOnlyMany
  resources:
    requests:
      # The volume size is arbitrary as it is ignored by the driver.
      storage: 1Gi
  storageClassName: csi-cvmfs-cms

Pods would then mount it like any other PVC. For simple setups this was good enough, but for applications using many repositories it quickly became hard to scale, because:

- The number of PVCs is proportional to the number of CVMFS repositories used

- The list of repositories must be known when the application Pods are deployed

- The client configuration is loaded only when the driver is deployed
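To make the first point concrete, here is a sketch of a Pod that needs two repositories under the v1 driver. It assumes a second StorageClass/PersistentVolumeClaim pair for atlas.cern.ch, defined the same way as the cms.cern.ch pair above; the claim name csi-cvmfs-atlas-pvc, the Pod name and the mount paths are hypothetical.

```yaml
# Sketch only: csi-cvmfs-cms-pvc comes from the example above, while
# csi-cvmfs-atlas-pvc is a hypothetical claim backed by its own StorageClass.
apiVersion: v1
kind: Pod
metadata:
  name: cvmfs-v1-demo              # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
    volumeMounts:
    # One volume mount per repository.
    - name: cms
      mountPath: /cvmfs/cms.cern.ch
      readOnly: true
    - name: atlas
      mountPath: /cvmfs/atlas.cern.ch
      readOnly: true
  volumes:
  # One PVC (and therefore one StorageClass) per repository.
  - name: cms
    persistentVolumeClaim:
      claimName: csi-cvmfs-cms-pvc
  - name: atlas
    persistentVolumeClaim:
      claimName: csi-cvmfs-atlas-pvc   # hypothetical, not defined above
```

Every additional repository means another StorageClass, another PVC and an edit to each Pod spec that needs it, which is exactly the scaling problem listed above.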

Previous alternatives

Because of the missing features and shortcomings of the first CSI driver, several alternatives appeared in the community, including the CERN ScienceBox CVMFS driver, the PRP OSG driver and docker-cvmfs.

They all follow a similar design: a DaemonSet exposes /cvmfs, or individual repositories, on a hostPath, and applications mount them from the host. This approach works well enough in many cases, but it lacks features such as declaring the mounts explicitly as PersistentVolumes, full integration and validation with the Kubernetes storage stack, reporting of failed mounts, and monitoring.

Many deployments also forbid user Pods from using hostPath volumes.
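For comparison, an application Pod in these DaemonSet-based setups typically consumes the host mount through a hostPath volume, roughly as sketched below. The host path /cvmfs, the Pod name and the propagation mode are assumptions for illustration; each project documents its own layout.

```yaml
# Sketch of the hostPath pattern; assumes a DaemonSet (not shown) has
# already mounted CVMFS at /cvmfs on every node.
apiVersion: v1
kind: Pod
metadata:
  name: cvmfs-hostpath-demo        # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
    volumeMounts:
    - name: cvmfs
      mountPath: /cvmfs
      readOnly: true
      # Lets mounts made later on the host (e.g. by the DaemonSet)
      # become visible inside the container.
      mountPropagation: HostToContainer
  volumes:
  - name: cvmfs
    hostPath:
      path: /cvmfs
      type: Directory
```

Nothing in this spec tells Kubernetes that the volume is CVMFS, so if the directory exists on the host but CVMFS is not mounted there, the Pod can start with an empty /cvmfs and the problem goes unreported. Clusters whose admission policies disallow hostPath volumes will reject such a Pod outright.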

Meet cvmfs-csi v2

The new CSI driver tackles all of the issues above. Its main feature is automounting: with a single PVC, users can now mount any repository on demand, simply by accessing it.

Here’s an example manifest:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: cvmfs
provisioner: cvmfs.csi.cern.ch
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cvmfs
spec:
  accessModes:
  - ReadOnlyMany
  resources:
    requests:
      # Volume size value has no effect and is ignored
      # by the driver, but must be non-zero.
      storage: 1
  storageClassName: cvmfs
---
apiVersion: v1
kind: Pod
metadata:
  name: cvmfs-demo
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: my-cvmfs
      mountPath: /my-cvmfs
      # CVMFS automount volumes must be mounted with HostToContainer mount propagation.
      mountPropagation: HostToContainer
  volumes:
  - name: my-cvmfs
    persistentVolumeClaim:
      claimName: cvmfs

The StorageClass cvmfs is pre-defined in CERN Kubernetes clusters.

After creating the cvmfs-demo Pod, repositories can be accessed like so:

$ kubectl exec -it cvmfs-demo -- /bin/sh
~ # ls -l /my-cvmfs
total 0

Note that the directory is empty, as no repository has been accessed yet. Repositories are mounted on demand the first time they are requested, as shown in these examples for atlas.cern.ch and cms.cern.ch:

~ # ls -l /my-cvmfs/atlas.cern.ch
total 1
drwxr-xr-x 10 999 997 16 Feb 29 2020 repo
~ # ls -l /my-cvmfs/cms.cern.ch
total 1282
drwxr-xr-x 8 999 997 4 Aug 19 2015 CMS@Home
drwxr-xr-x 19 999 997 4096 Apr 11 08:02 COMP
-rw-rw-r-- 1 999 997 429 Feb 12 2016 README
-rw-rw-r-- 1 999 997 282 Feb 18 2014 README.cmssw.git
-rw-rw-r-- 1 999 997 61 Jul 13 2016 README.grid
-rw-r--r-- 1 999 997 341 Apr 23 2019 README.lhapdf
...

Another big change in the new version is support for multiple CVMFS client configurations through a ConfigMap. Previously, client configuration could only be set in the driver deployment itself, so any change required restarting the CSI driver. Now, once the ConfigMap is updated, all new mounts pick up the new settings, while existing mounts are left untouched. Here is an example for the DESY instance:

data:
  ilc.desy.de.conf: |
    CVMFS_SERVER_URL='http://grid-cvmfs-one.desy.de:8000/cvmfs/@fqrn@;...'
    CVMFS_PUBLIC_KEY='/etc/cvmfs/config.d/ilc.desy.de.pub'
  ilc.desy.de.pub: |
    -----BEGIN PUBLIC KEY-----
    MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEA3pgrEIimdCPWG9cuhQ0d
    ...
    -----END PUBLIC KEY-----
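The snippet above shows only the data section. As a sketch, a complete object could look like the following; the ConfigMap name and namespace are placeholders, so check the driver's deployment (for example its Helm chart) for the ConfigMap it actually reads. Because CVMFS_PUBLIC_KEY points at /etc/cvmfs/config.d/ilc.desy.de.pub, the sketch assumes each key ends up as a file in /etc/cvmfs/config.d.

```yaml
# Sketch: the name and namespace below are placeholders, not the driver's defaults.
apiVersion: v1
kind: ConfigMap
metadata:
  name: cvmfs-config-d             # hypothetical name
  namespace: cvmfs-csi             # hypothetical namespace
data:
  # Each key presumably becomes a file under /etc/cvmfs/config.d.
  # In CVMFS_SERVER_URL, the client replaces @fqrn@ with the fully
  # qualified repository name (here ilc.desy.de); ";" separates servers.
  ilc.desy.de.conf: |
    CVMFS_SERVER_URL='http://grid-cvmfs-one.desy.de:8000/cvmfs/@fqrn@;...'
    CVMFS_PUBLIC_KEY='/etc/cvmfs/config.d/ilc.desy.de.pub'
  ilc.desy.de.pub: |
    -----BEGIN PUBLIC KEY-----
    MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEA3pgrEIimdCPWG9cuhQ0d
    ...
    -----END PUBLIC KEY-----
```

Once the updated ConfigMap is applied, a new mount such as ls /my-cvmfs/ilc.desy.de in the cvmfs-demo Pod should use these settings, while repositories that are already mounted keep their current configuration.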

Next steps

We plan to keep evolving the driver. The immediate focus will be on:

- Support for Volume Health Monitoring, to detect abnormal volume conditions and report them as events on PVCs or Pods

- (Re)adding support for CVMFS Tags and Hashes, a feature present in the previous driver version but not very popular among CVMFS users; according to the CVMFS team it is barely used, if at all. Still, we would like to support it in the near future

If you use the CERN Kubernetes service, the new driver is available in all cluster templates for Kubernetes 1.24 and later. Check out our docs.

If you run other Kubernetes deployments and need access to CVMFS repositories, please try the new version and send us your feedback at our new home! The driver now lives next to the CVMFS core components, where it belongs.